In this note I’d like to try something different: I’m calling it “odd bits of tacit knowledge.”

They say to write what you know. But how do you communicate things you know that are more feel, more the patterns and models you find yourself acting on practically, than any sort of calcified formal scientific knowledge?

One way of describing this is as tacit knowledge:

…knowledge that is difficult to express or extract; therefore it is more difficult to transfer to others by means of writing it down or verbalizing it. This can include motor skills, personal wisdom, experience, insight, and intuition.

In my world, a useful instance of this is Tom Kalil’s paper, Policy Entrepreneurship at the White House: Getting Things Done in Large Organizations.[1] Another is Marina Nitze and Nick Sinai’s recent book, Hack Your Bureaucracy.

Another useful frame comes from James C. Scott in what he calls metis:

a wide array of practical skills and acquired intelligence in responding to a constantly changing natural and human environment…the practices and experiences of metis are almost always local…applicable to similar but never identical situations…[Metis] resists simplification into deductive principles which can successfully be transmitted through book learning, because the environments in which it is exercised are so complex and non-repeatable that formal procedures… are impossible to apply[2]

This gives you a sense of the kind of knowledge I’m gesturing towards. Not rules, but heuristics. Not direction, but patterns to consider and see if they fit in a given context.

So I’m going to attempt to write down some of this. Per Patrick McKenzie, a lot of this is the sort of thing I find myself saying to a fellow practitioner, or emailing back to someone who asks me for advice. My thought is, if it was useful enough to share more than once in this way, maybe it’s useful more broadly.[3]

Some odd bits of tacit knowledge, volume 1

My domain is generally improving the technology and experience of government safety net programs, with some extra practical depth in SNAP and UI. Here are some things that I think I know.

The 3 legs of the stool: instrumentation, user complaints, and user observation

(a.k.a. the first thing I tell people to do when they’re looking to improve any online service or transaction)

Because I’m known for improving an online application for benefits, a lot of people come to me in a similar position. What I say to do has become fairly consistent over time.

Distilled, what I say is you need situational awareness of the actual problems before changing anything.

To operationalize this, I consistently recommend 3 concrete steps:

(1) Find or add metrics/instrumentation in the online service. For example, in an application, of those who start, what % successfully submit? How long does it take to complete the transaction? These are usually gettable to some extent via Google Analytics, and given how well trodden it is, I usually say to add that if it’s not there already, because it’s usually the lightest lift. But you can do this with plenty of other application monitoring tools (New Relic, AppDynamics) that provide more granularity if you can get it done.

Often metrics or instrumentation like this are fully missing. Unfortunately, step 1 then becomes adding them. But again, it’s usually not a massive lift. Other times, IT has this but no one on the program side realizes it. So asking kindly and curiously in a meeting with both IT and program staff often surfaces it.
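As a rough illustration of the two example metrics in step (1), here is a minimal sketch in Python. Everything about the data is an assumption: the field names (`session_id`, `event`, `timestamp`) and the event names (`start`, `submit`) are hypothetical, and a real export from an analytics or monitoring tool will look different.

```python
from datetime import datetime
from statistics import median

# Hypothetical event log: one row per event. Field and event names are
# assumptions for illustration, not any particular tool's export format.
EVENTS = [
    {"session_id": "a", "event": "start", "timestamp": "2023-01-05T09:00:00"},
    {"session_id": "a", "event": "submit", "timestamp": "2023-01-05T09:25:00"},
    {"session_id": "b", "event": "start", "timestamp": "2023-01-05T10:00:00"},
    {"session_id": "c", "event": "start", "timestamp": "2023-01-05T11:00:00"},
    {"session_id": "c", "event": "submit", "timestamp": "2023-01-05T11:45:00"},
    {"session_id": "d", "event": "start", "timestamp": "2023-01-05T12:00:00"},
]


def summarize(events):
    """Return (completion_rate, median_minutes_to_submit) for started sessions."""
    starts, submits = {}, {}
    for row in events:
        when = datetime.fromisoformat(row["timestamp"])
        sid = row["session_id"]
        if row["event"] == "start":
            # Keep the earliest start per session.
            starts[sid] = min(when, starts.get(sid, when))
        elif row["event"] == "submit":
            # Keep the earliest submit per session.
            submits[sid] = min(when, submits.get(sid, when))

    if not starts:
        return 0.0, None

    # Only sessions that both started and submitted count as completed.
    completed = [sid for sid in starts if sid in submits]
    rate = len(completed) / len(starts)
    minutes = [(submits[sid] - starts[sid]).total_seconds() / 60 for sid in completed]
    return rate, (median(minutes) if minutes else None)


if __name__ == "__main__":
    rate, minutes = summarize(EVENTS)
    print(f"Completion rate: {rate:.0%}")
    print(f"Median minutes to submit: {minutes}")
```

The median is used rather than the mean here because a few sessions left open overnight can otherwise dominate the number; that is a design choice of this sketch, not something the tools above necessarily do.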

Tactical tip: you can look at the HTML source of any web page and see if either Google Analytics or one of many similar tools is installed.
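If you would rather not read the source by eye, the same check can be sketched in a few lines of Python. The marker strings below are my assumption about what commonly shows up in a page when these tools are installed; the list is illustrative and incomplete, and vendors change their snippets over time, so treat a miss as "not found by this check" rather than "not installed."

```python
# Substrings that commonly appear in a page's HTML when a tool is installed.
# This list is an illustrative assumption, not an authoritative registry.
MARKERS = {
    "Google Analytics / Google Tag Manager": (
        "googletagmanager.com",
        "google-analytics.com",
    ),
    "New Relic Browser": ("js-agent.newrelic.com", "nreum"),
    "AppDynamics Browser RUM": ("adrum",),
}


def detect_tools(html):
    """Return a sorted list of tool names whose markers appear in the HTML."""
    lowered = html.lower()
    return sorted(
        name
        for name, needles in MARKERS.items()
        if any(needle in lowered for needle in needles)
    )


if __name__ == "__main__":
    # A made-up page for demonstration. For a real page, save the
    # "View Source" output to a file and pass its contents to detect_tools().
    sample = """
    <html><head>
      <script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXX"></script>
    </head><body>Apply for benefits</body></html>
    """
    print(detect_tools(sample))
```

This is plain substring matching, so it can produce false positives (any page text containing one of the markers) and it will not see tools loaded indirectly by other scripts.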

(2) Find (and add escape hatches for) user complaints. Figure out where users complain when something is not working. Often in my world there is some sort of helpdesk email address. There’s always a call center that people call when they have tech issues. Appeals and other formal program channels occasionally surface these problems too, but less often. Facebook and Reddit groups often have people posting screenshots these days, as does Twitter (look for mentions of the agency account). Find these complaints. They are more specific and actionable than metrics alone.

(3) Observe real users using the system. Virtually no one disagrees that this is useful. But many disagree that it is worth the hassle (legally, logistically) to do it. It has to be done, though, because that’s where all the texture behind the metrics comes from. You can’t generally guess at what a problem is from metrics, and while complaints are better, they have less fidelity than a short video of a person’s specific problem.

Sometimes people try to build this into a procurement as an early phase (e.g. discovery). I tend to think this needs to be done beforehand, because otherwise too much of an RFP scope will rest on assumptions uninformed by what this info can provide. A useful advantage of doing it first is that it’s almost always much, much less effort than even writing a proposed scope or RFP for a technology overhaul.

Imagined problems and executive summary drift

Many UX/CX efforts start with high level problem definitions (“the web site is hard to use.”) I, personally, have a strong distaste for these. They’re not well-defined or actionable.

What’s worse, such high level summary problems tend to lead people to imagine problems based on pattern recognition. “This other team found their application was burdensome, so I bet this one is too.” But the specifics in a given context are what matter.

And there’s a structural aspect to this too: all of government runs on executive summaries (and summaries of summaries). So you start with “Bill had to click 6 times to get from screen A to screen B” and you end up with a polished PowerPoint deck containing journey maps and a big bullet that reads “the application is not user-friendly.” This summary is to some extent an imagined problem, rather than a specific one.

The need for summaries necessarily creates a drift back to imagined problems. That drift is always dangerous, but especially so when there is buy-in to the idea (e.g. CX) alongside much more tactical disagreement about the actual actions required. At equilibrium, this generates decks and press pieces on how user experience is great, announcements of new “single stop” projects, etc.

Aside: I think this phenomenon is more generally connected to the political economy of small problems. Most UX/CX is not some big thing (exception: the site is always down) but instead lots and lots of little things. It’s hard to agenda-set on a long tail of little things. Political upside is low and legibility is low.

Starting with implementation support, not an attempt at agenda setting

Many times when people with a technology or user experience background come into government, it is not in a context where the top organizational priority is improving along those dimensions.

A strategic error I’ve seen is when such people try to immediately make their focus the top of the agenda. This tends to backfire and alienate others for many reasons, not least of which is that the power to set the agenda is much more formal and defined in government than it is in, say, a technology company.[4]

My preferred approach is to just take as table stakes whatever the top priority is, and figure out some way to be helpful. Often the shape this takes is sitting in meetings and asking genuinely curious questions aligned with the leadership goals, but which I notice no one else is asking. (Again, there are good reasons.) And then from that, working out some approaches that are practically doable by the team/org as-is, but which were not the approach before I arrived.

With some value added implementing an existing agenda item, I start to accrue tokens as a helpful, friendly person whom others can come to with other questions. Eventually, iterated enough, that tends to lead into agenda setting.

If you want to tackle friction, work on the source of friction

Many people want to improve experience. But the negatives of an experience are almost always coming from somewhere. Going to that somewhere and working on it can give far more leverage than working on an initiative to reduce friction in the abstract. Often this is because the latter is newer and less established, whereas the former is in the institutionalized core.

Many people shy away from such areas as payment accuracy, QC, etc. but you can address many problems at the source! The key tradeoff is you’re also working on things you might not see as your #1 priority, but that’s to each their own.

So there it is: a bit of tacit knowledge. I hope it helps, but it might not!

An example of how fraudsters are trying to steal EBT recipients’ identities

Lastly, a little potpourri item.

I’m a bit of a weirdo. I spend a lot of time on social media spaces where people help each other out with government benefits. It’s a great source of ground truth, and also, well, helping people directly is a useful recharge when you work many abstracted levels away the rest of the day.

I also come across scammers and criminals doing bad things. Here’s one instance of that, to show some color as to the mechanics of some of these issues.

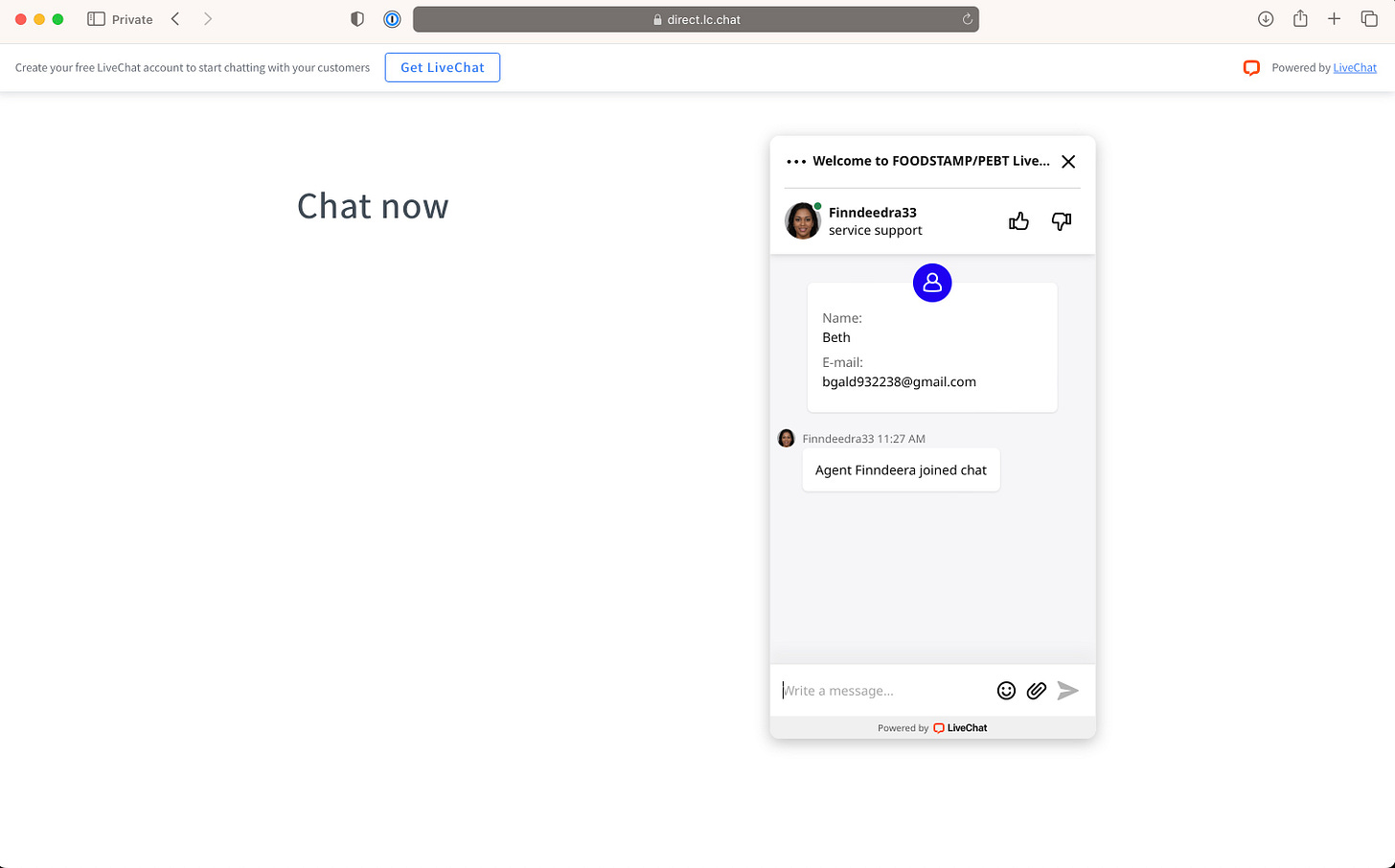

On a Facebook group, a (presumably fake) account posted this:

As it says, this is a “direct.lc.chat” URL, which is the hosted service for Livechat.com, which offers the ability to run live chat support services.

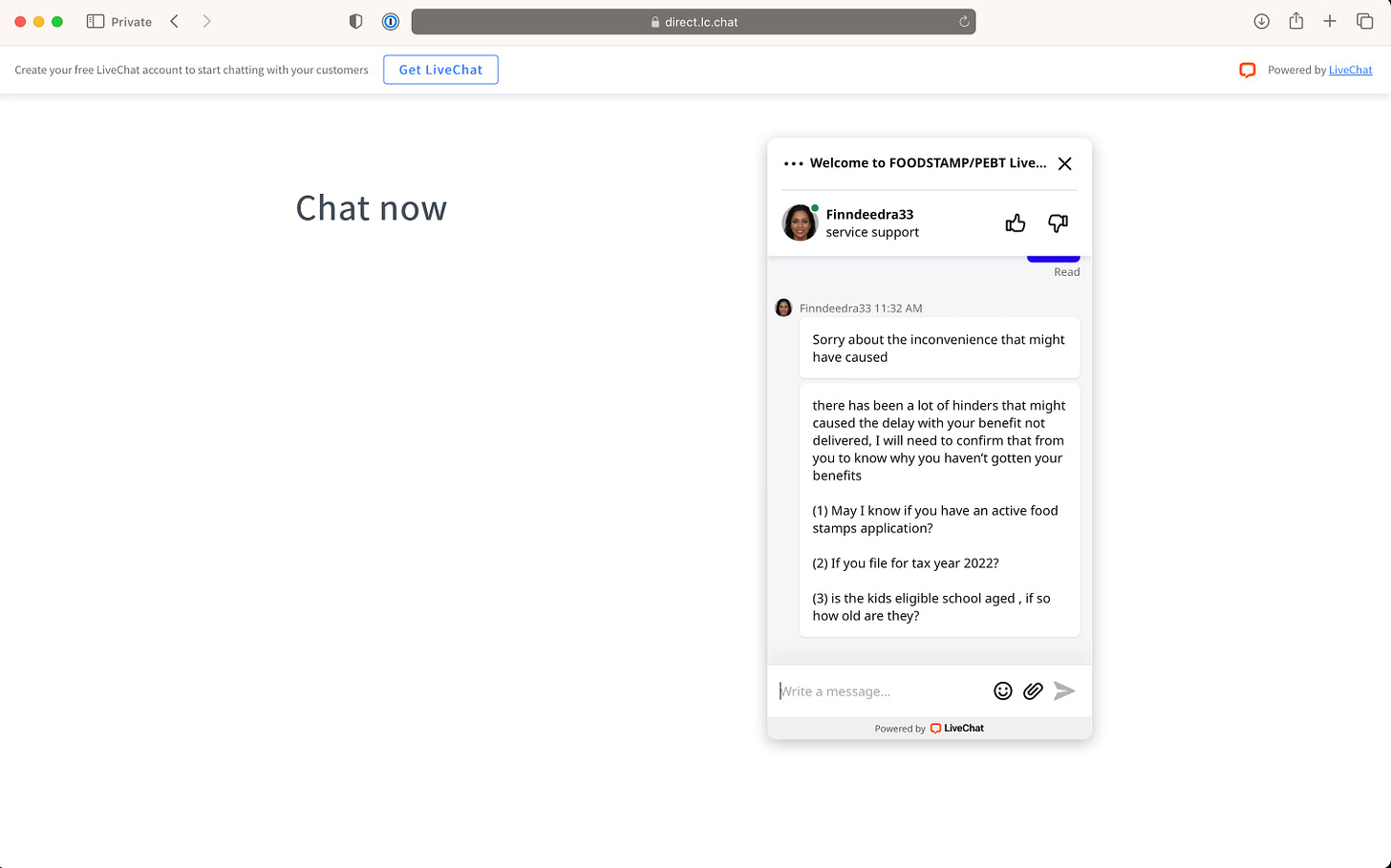

I put in some dummy info to start a chat and see what it did. They then ask some questions:

Notice from the questions that they appear to be trying to work out how they can use my identity information once they get it from me. Here, I believe #1 and #3 would be EBT card fund theft, and #2 would likely be filing taxes using my SSN to steal a refund.

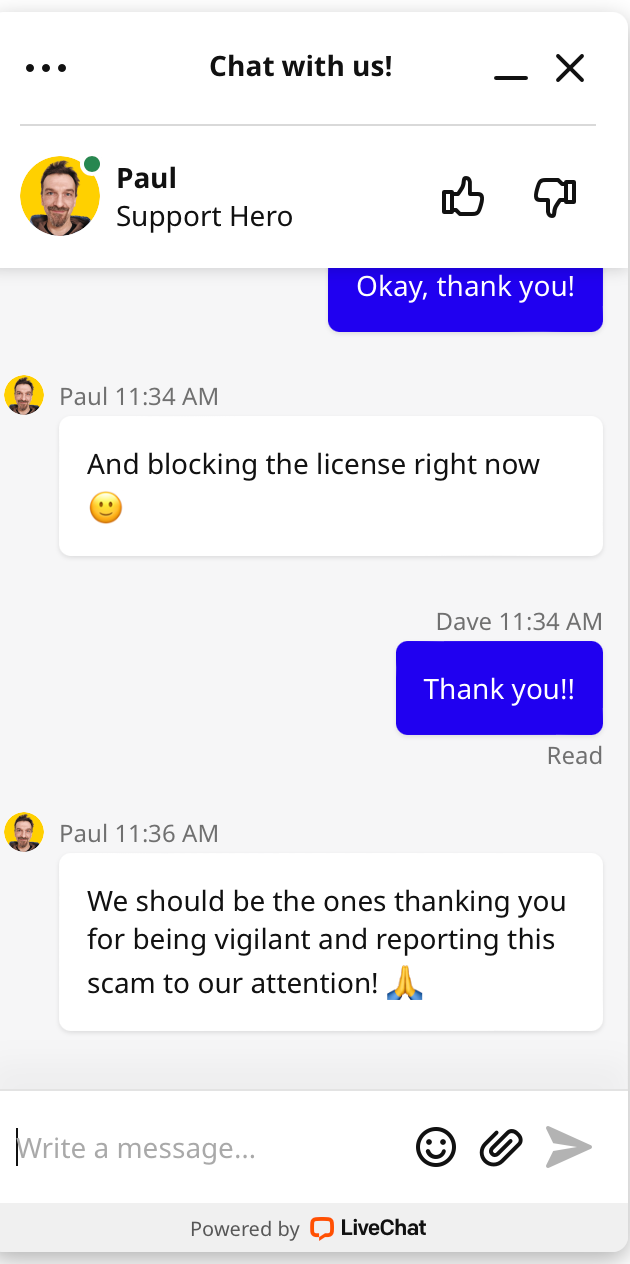

Next, of course, they say they need to verify my identity. (This is when I stopped, and contacted Livechat.com directly to have it taken down.)

I’ve seen this particular scam approach twice now, and I want to give major credit to Livechat.com’s support team that took it down quickly both times.

Thanks Paul!

[1] This piece had ~35% resonance before I moved more formally inside government, and after that experience inside it is now at ~70% resonance. I now share it routinely with those new to a government agency.

[2] I am indebted to this page for gathering many of these distilling excerpts: http://makecommoningwork.fed.wiki/view/metis

[3] No warranties on metis, though!

[4] …though not all tech companies! Particularly large tech co’s very quickly start to resemble government more than startups. In conversations, I have found more common ground with employees at Google and Facebook than most other categories.

Great article, the Kalil paper link appears to not work, FYI.

> I think this phenomenon is more generally connected to the political economy of small problems. Most UX/CX is not some big thing (exception: the site is always down) but instead lots and lots of little things. It’s hard to agenda-set on a long tail of little things. Political upside is low and legibility is low.

This resonates a lot coming from a tech company background too! Two thoughts I have based on my experiences around this, neither of which fully addresses the issues, but both are techniques I've seen work reasonably well to make progress.

1. One theory I have is that these are best addressed through culture rather than planning. If the culture has high standards, it makes it harder to ship low quality stuff. (This only goes *so* far, obviously, but I saw at Dropbox how a culture of quality in, e.g., hiring, was incredibly powerful in maintaining a rigorous hiring process in the face of a competing need to hire quickly.)

2. Another technique that I've seen is to provide a fixed time budget to product teams that is meant to be spent on quality issues. If something feels subjectively "low quality", that gives leaders a way to say "I want to invest in this" without having to micro-manage the specifics of "what needs to be done". This is also useful for technical debt. (For example, you can say that teams should spend 20% of time on quality and customer issues, 20% of time on technical debt, etc., and then that creates space for each of those specific constituencies at the local level to know that the time is protected.) Of course, this only works if the culture is willing to support that kind of investment. At Dropbox, we actually had a standard 60/20/20 framework that we asked teams to align to during our roadmapping process. (There are some downsides to this approach, particularly if you get into too much detailed accounting of the work, but it is a nice way to help, e.g., product management, engineering and other strategic functions talk about this kind of work without having to micro-manage it.)