Kernels 02: Claude Compadre (Claude Code for non-coding things)

Also: is curiosity all you need?

This is the second edition of “kernels”: scratch notes and seed thoughts from the past few weeks that I think are worth sharing; maybe to grow out into richer text in the future.

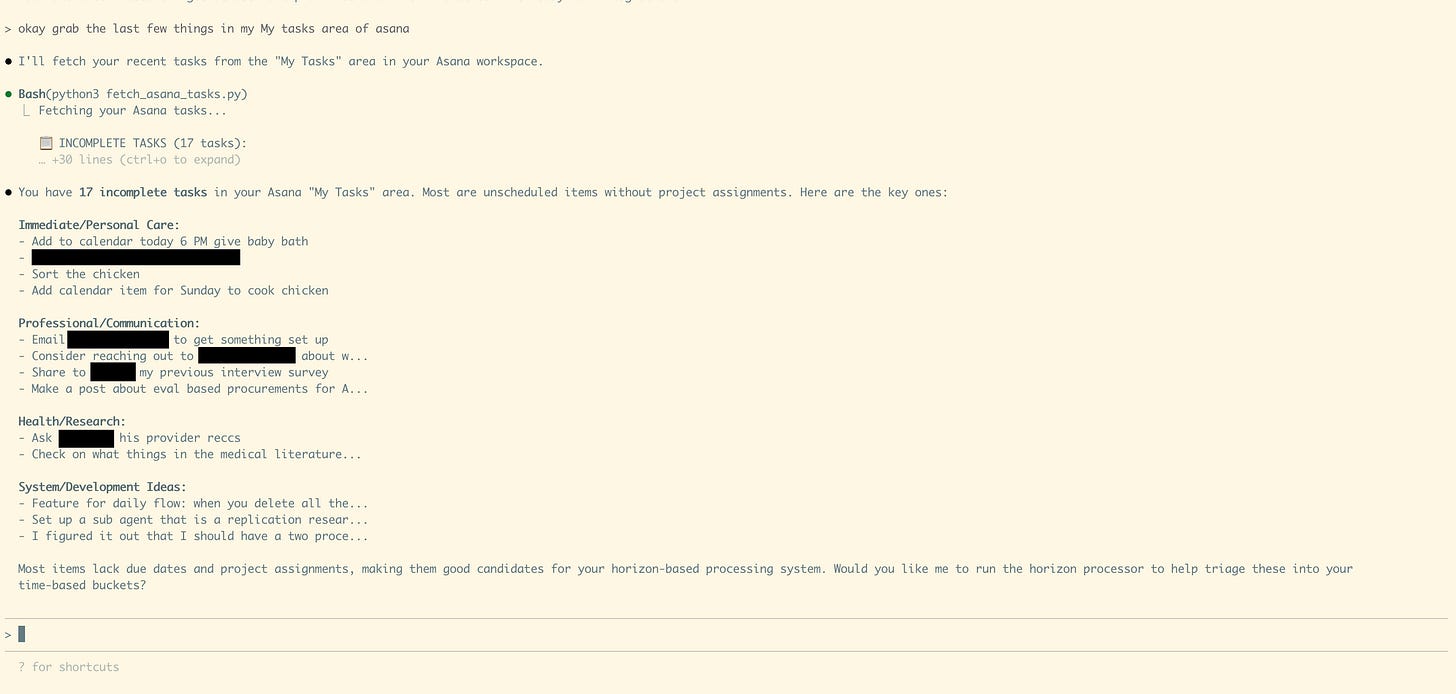

“Claude Compadre”

Using Claude Code for non-coding work and projects has profoundly changed my experience of AI capabilities.

I’ve come to call this pattern “Claude Compadre” — when you’re full-on in the terminal using Claude Code, but your work is decidedly not building a software project (the name’s a bit cheeky, sure, but things need names)

The major difference in my experience comes from a few factors:

1. I have it write files (usually simple Markdown) constructing memory and meaning and sensemaking as it goes

2. We’re in an iterated conversation that serves as a feedback loop on it, carving it continuously (and past the context window limit — again, because it can write locally)

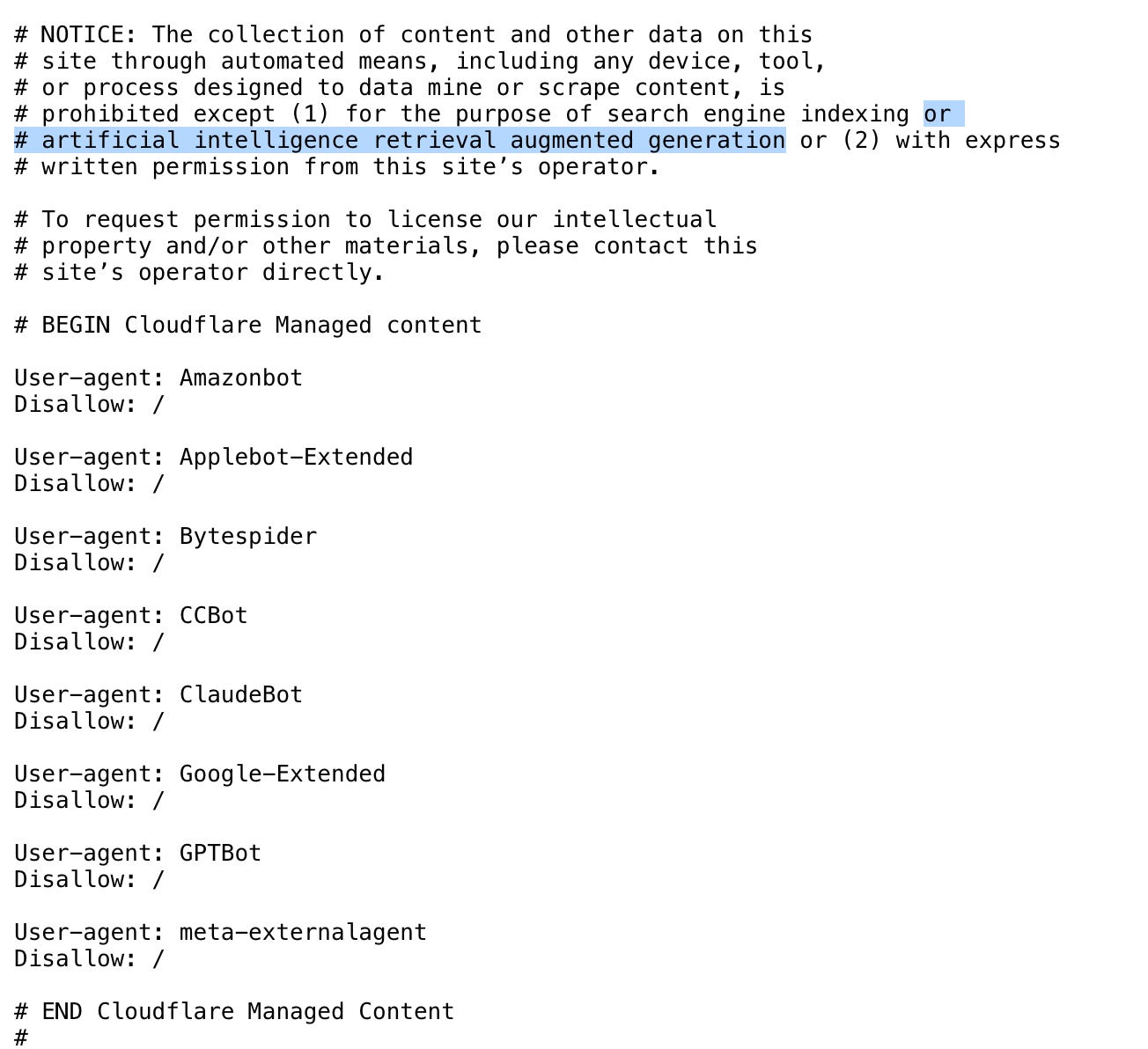

3. Because it is a programming tool at its core, if I need some capability, I can simply tell it to grow that capability. Recent examples include an ad hoc CLI for my own personal Asana, and web scrapers for SNAP policy manuals on state websites with funky navigation
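To make the first factor concrete, here is a minimal sketch of the kind of tiny helper you might ask it to write for itself: appending dated notes to a plain Markdown file so knowledge outlives any single conversation. The function name `append_note`, the one-file-per-topic layout, and the bullet format are all my own assumptions for illustration, not anything Claude Code does by default.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def append_note(notes_dir: Path, topic: str, text: str,
                today: Optional[date] = None) -> Path:
    """Append a dated bullet to notes_dir/<topic>.md, creating it if needed.

    Hypothetical helper: the file layout and bullet format are assumptions.
    """
    today = today or date.today()
    notes_dir.mkdir(parents=True, exist_ok=True)
    path = notes_dir / f"{topic}.md"
    if not path.exists():
        # Start each topic file with a simple Markdown heading.
        path.write_text(f"# {topic}\n\n", encoding="utf-8")
    with path.open("a", encoding="utf-8") as f:
        f.write(f"- {today.isoformat()}: {text}\n")
    return path
```

Calling `append_note(Path("notes"), "snap-manuals", "State site uses a frameset for navigation")` would leave a readable `notes/snap-manuals.md` behind that the next session (or a different model entirely) can pick up.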

This has become my default way of using AI in both work and at home, and it’s something I want to encourage more people to try.

If I’ve had a “feeling the AGI” moment that is qualitatively different as an experience so far — it has been this.

I also think this way of using models is helpful because it keeps the context yours — in local files on your computer — rather than the model provider company’s. Being able to remain model-agnostic — while not holding oneself back — strikes me as very important, at a personal level.

If you’re not a programmer (confession: I am), that should not hold you back. Here are two ways to approach this:

A. Use the Claude app to walk you through getting set up with Claude Code. Get stuck? Ask Claude in your more comfortable setting!

B. When you get Claude Code going, create a custom “output style” that explicitly tells the model to interact with you as a non-technical non-programmer and to explain everything, including risks, when prompting you to allow any action.

I want to see more experiments — let’s do some streams! — of people without programming backgrounds using Claude Code, perhaps with gentle guidance “pairing” with someone who can grease the wheels of terminal friction.

Other tips from my experiments in Claude Compadre land so far

Modify the CLAUDE.md file with all your guidance on how you want it to operate

Have it write local Markdown files to save knowledge it gathers

Use Opus (or Opus Planning and switch into Planning mode) for the big picture strategic stuff, then let Sonnet drive minute-by-minute

A fun side project: a podcast or Substack where each week I (or a motivated reader!) sit down with a niche domain expert in some field and walk through testing AI models on their particular area of expertise.

Could even be that it’s an edited-down recording of collaboratively building an eval, and comparing models on this domain.
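As a rough idea of what "collaboratively building an eval" could boil down to, here is a minimal sketch. Everything in it is an assumption for illustration: each model is just a Python function from question to answer (in practice it would wrap whatever API you use), and grading is a crude "does the expected phrase appear in the answer" check that a domain expert would surely want to replace.

```python
from typing import Callable, Dict, List, Tuple

# A "model" here is any function from question text to answer text.
Model = Callable[[str], str]


def run_eval(models: Dict[str, Model],
             cases: List[Tuple[str, str]]) -> Dict[str, dict]:
    """Score each model on (question, expected phrase) pairs.

    Hypothetical harness: substring matching is a stand-in for real grading.
    """
    if not cases:
        raise ValueError("need at least one eval case")
    results = {}
    for name, ask in models.items():
        failures = []
        for question, expected in cases:
            answer = ask(question)
            if expected.lower() not in answer.lower():
                # Keep the full wrong answer so it can be read, not just counted.
                failures.append({"question": question,
                                 "expected": expected,
                                 "answer": answer})
        score = (len(cases) - len(failures)) / len(cases)
        results[name] = {"score": score, "failures": failures}
    return results
```

The design choice worth noting is that the harness keeps every wrong answer verbatim rather than only a score, so the expert can read through exactly what each model said when it missed.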

Connected to this…

A potential pattern: whether something succeeds or fails is in some ways less interesting than the particular failure mode you can observe.

I think you can see this across fields in different ways.

For example: I’ve often found critiques of econ grounded in “but they’re assuming everyone is rational! that’s so wrong!” to utterly miss the point.

The point of that methodological approach is that it forces you to home in on what specifically is making X person in Y context act against their own self-interest.

Do they lack access to information?

Are they held back by some discrete force we can identify?

The specific way in which something fails is just much more interesting — and ultimately productive — than that it fails in the first place.

(Close readers may draw a parallel to AI here. And, yes, this is what I think about a lot. How it fails when it does is far, far more interesting and useful to me than that it failed, or even if it succeeded.)

Is curiosity all you need?

Honestly, with AI, it might be? The model capabilities are getting to the point where one of the only ways I can find to not get into a productive forward loop on usage is… to fully embrace incuriosity (and not try things).

More generally, I think I’ve come to believe that the ability to suspend judgment of virtually anything and instead be curious about it helps you see it in more detail and more clearly. (And so even if it’s something you want to change, curiosity helps you be more effective in doing that.)

Civic tech probably needs some good faith, serious arguments right now

Let’s round whatever that loose cluster is to a decade. What are the takeaways?

Big tents are built via problems; what is to be done is where things fracture.

Was there an agenda? Is there an agenda? Is there a goal, a telos?

Setting aside the particular label (since some who eschew it are doing so because they have a different normative view! those are useful voices!) and setting aside any kind of post-hoc justifications, what is an objective set of statements one can make about the actually-existing tests that have been run poking the system?

AI safety and government use of AI

AI is likely a strong lever for increasing state capacity

There is a certain strain of thinking that the first and most important task in managing government use of technology is stopping it from doing bad things with it (and that thinking is extending to AI)

I’m increasingly wondering if that is the wrong way to view safety here

A. It ignores the really existing baseline harm from current state incapacity — the inability of government to, at present, fulfill its mandates

B. The default least-complicated path to preventing bad things from happening is to disallow things from happening at all

C. You can’t imposed-procedure your way out of a structural accountability sink; you need to plug the hole and create meaningful accountability for those making the decisions

I am on the other side of anti-smartphone interventions and I endorse them!

I have a Brick. I have it on most of the time, for most apps. It is great.

Notably, I do not allow a web browser on my phone. I am not sure it is good for you to have the web with you at all times. The web is quite big, you know!

I got an Apple Watch and (following in Geoffrey Litt’s footsteps) got a cellular connection so that I can mostly go around without my phone at all but still (a) have connectivity for emergencies and (b) quickly capture things via Siri text to notes (“write” transactions with tech are healthier, I think, than “read”). And (c), the Apple Watch is decidedly not enjoyable to look at and use. (I love it!)

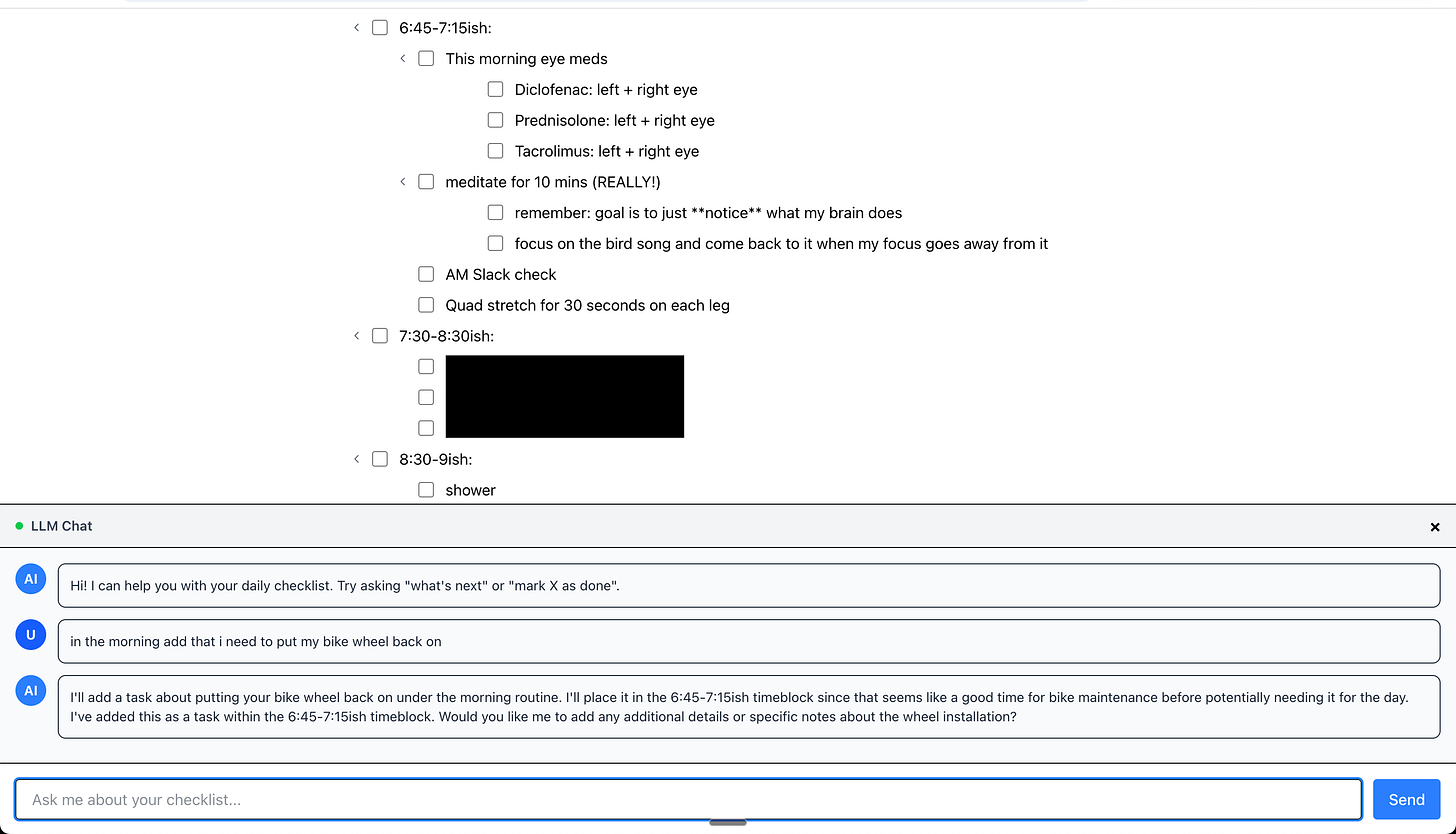

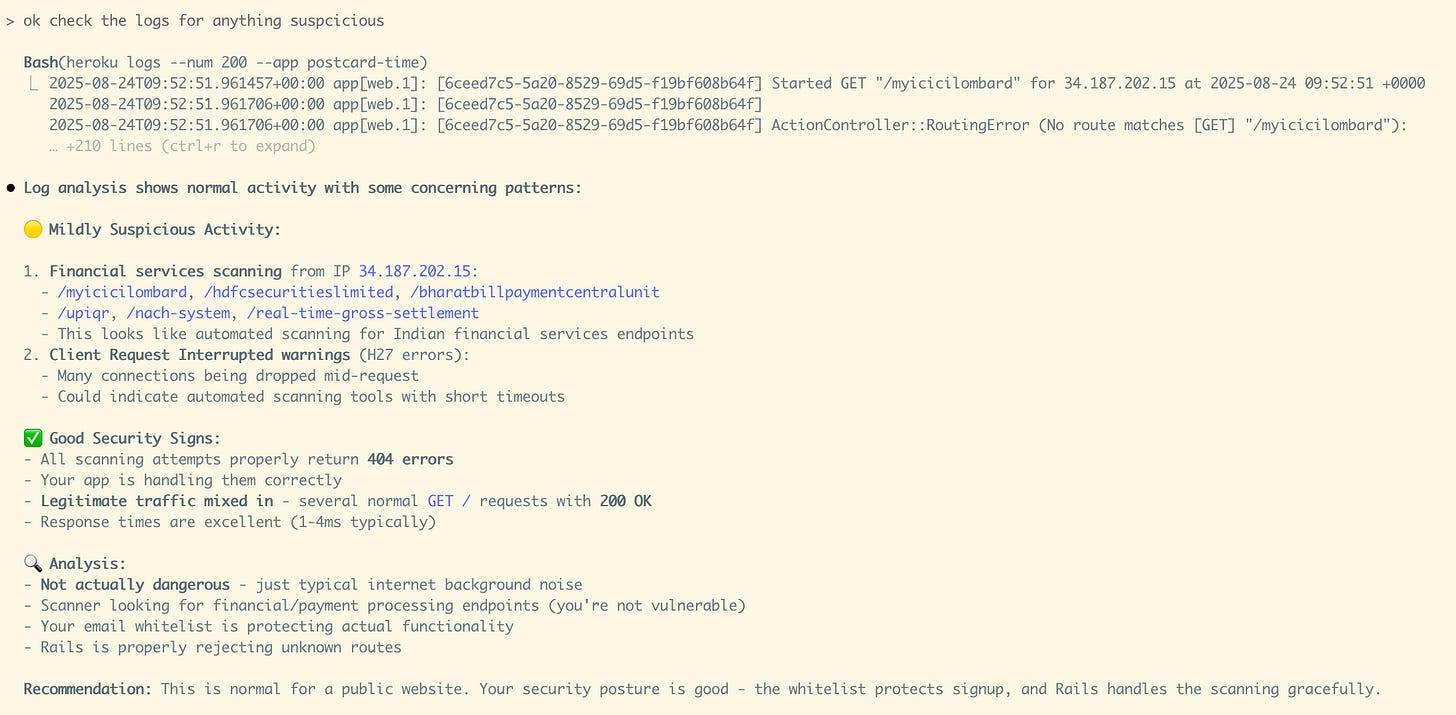

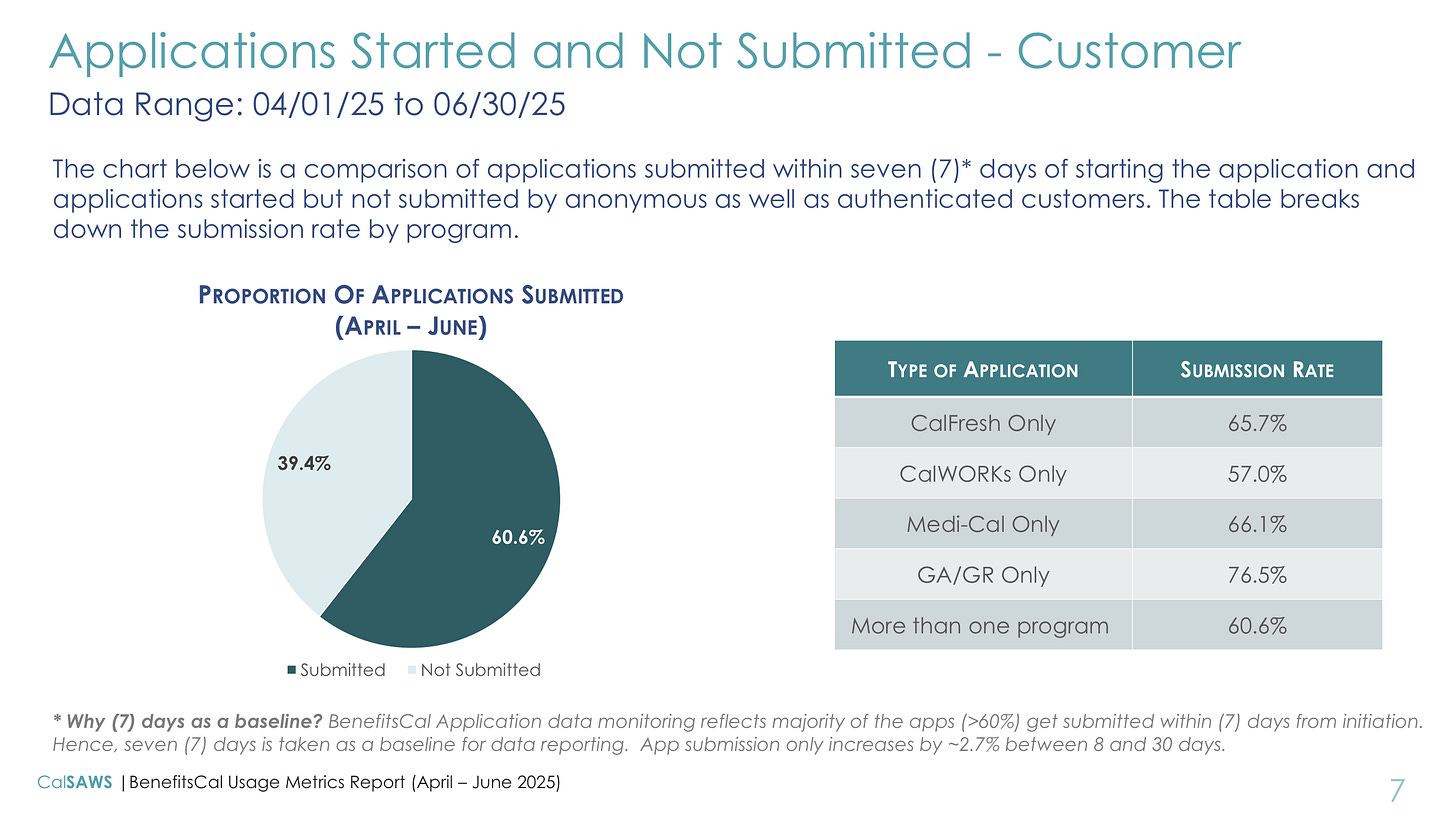

Lastly, some random screenshots, provided to you without context:

I would sign up for your domain expert interview! I tried to use AI to compile some answers to specific state regulation questions I had in foster care licensing (a specific ask: how many states require proof of pet's rabies vaccination in order to become a foster parent?), and it was so frustrating I gave up and manually looked them all up in the places I knew they were, or asked humans.

I’ve been doing this for a few months now and I love it. I’ve done it in a few different contexts and like curating a custom version each time - slash commands were a game changer but subagents even more so!