Using AI to make sense of policy documents

Also: how do we eval *human work* in govt, and a screenshot with *some* context

Note from Dave: A few weeks ago, my family had what would be described, in the delightfully bureaucratic argot of health policy, as a “qualifying life event.” As planned, I’ve closed out my consulting work for a few months for paternity leave, and am likely to post a bit less. I still appreciate emails but am very likely to be slower to respond to them. Caveat emailor!

Using AI to make sense of policy documents

The ever-fun question of SNAP household composition

I’ve been experimenting with using AI to do “policy reasoning.” That’s because this is fundamentally what staff have to do to answer questions about often complicated and complex[1] rules.

Here is a fairly promising example using OpenAI’s GPT-4 model (via ChatGPT Plus), feeding it:

SNAP federal regulations, paired with

a real, verbatim SNAP client question (from Reddit)

Let’s break it down.

First, I provide a prompt to steer the model:

Note two key parts:

Think step by step

Ask follow-up questions if necessary

The first has been shown to improve reasoning in large language models. The second is built in because the initial question may well lack some information needed to analyze the case according to the rules.
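Outside the ChatGPT interface, the same setup (this steering prompt, followed by the client question and then the regs) could be assembled as a chat-style message list. A minimal sketch: the prompt wording and the `build_messages` helper are hypothetical, and the `role`/`content` dictionaries follow the common chat-API message shape rather than anything shown in the screenshots.

```python
# Hypothetical prompt wording; only the two key instructions come from the post.
STEERING_PROMPT = (
    "You are helping answer a client's question about SNAP rules. "
    "Think step by step. "
    "Ask follow-up questions if necessary: the question may lack "
    "information needed to analyze the case by the rules."
)


def build_messages(client_question: str, policy_text: str) -> list[dict[str, str]]:
    """Assemble steering prompt, client question, then policy text, in that order."""
    return [
        {"role": "system", "content": STEERING_PROMPT},
        {"role": "user", "content": "Client question:\n\n" + client_question},
        {"role": "user", "content": "Relevant policy language:\n\n" + policy_text},
    ]
```

The resulting list could be passed to whichever chat API you use; nothing here calls a model.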

Then, I provide a (real! verbatim!) user question that I got from /r/foodstamps on Reddit:

I then provide the model with the full policy language from the Code of Federal Regulations on the “Household Concept”:

(I’ve cut off the rest here because the regs are fairly long. But the whole thing fits in the context window, i.e., the text the model can directly use to answer my question!)
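Whether “the whole thing fits” is easy to sanity-check before pasting. A minimal sketch, assuming a crude ~4-characters-per-token rule of thumb for English text (an exact count needs the model’s own tokenizer) and a context limit you supply yourself; the helper names and the 1,000-token reserve for the reply are hypothetical.

```python
# Rough sanity check that a prompt fits a model's context window.
# Assumption: ~4 characters per token is a crude rule of thumb for English text;
# an exact count requires the model's own tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate tokens from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(parts: list[str], context_limit: int, reserve_for_answer: int = 1000) -> bool:
    """True if all prompt parts plus room reserved for the reply fit within context_limit."""
    used = sum(estimate_tokens(p) for p in parts)
    return used + reserve_for_answer <= context_limit
```

Because the heuristic is approximate, a result that only barely fits is worth re-checking with the model’s real tokenizer.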

GPT-4 then responds with some sense-making, as requested.

I then provide more information about the key dimensions.[2]

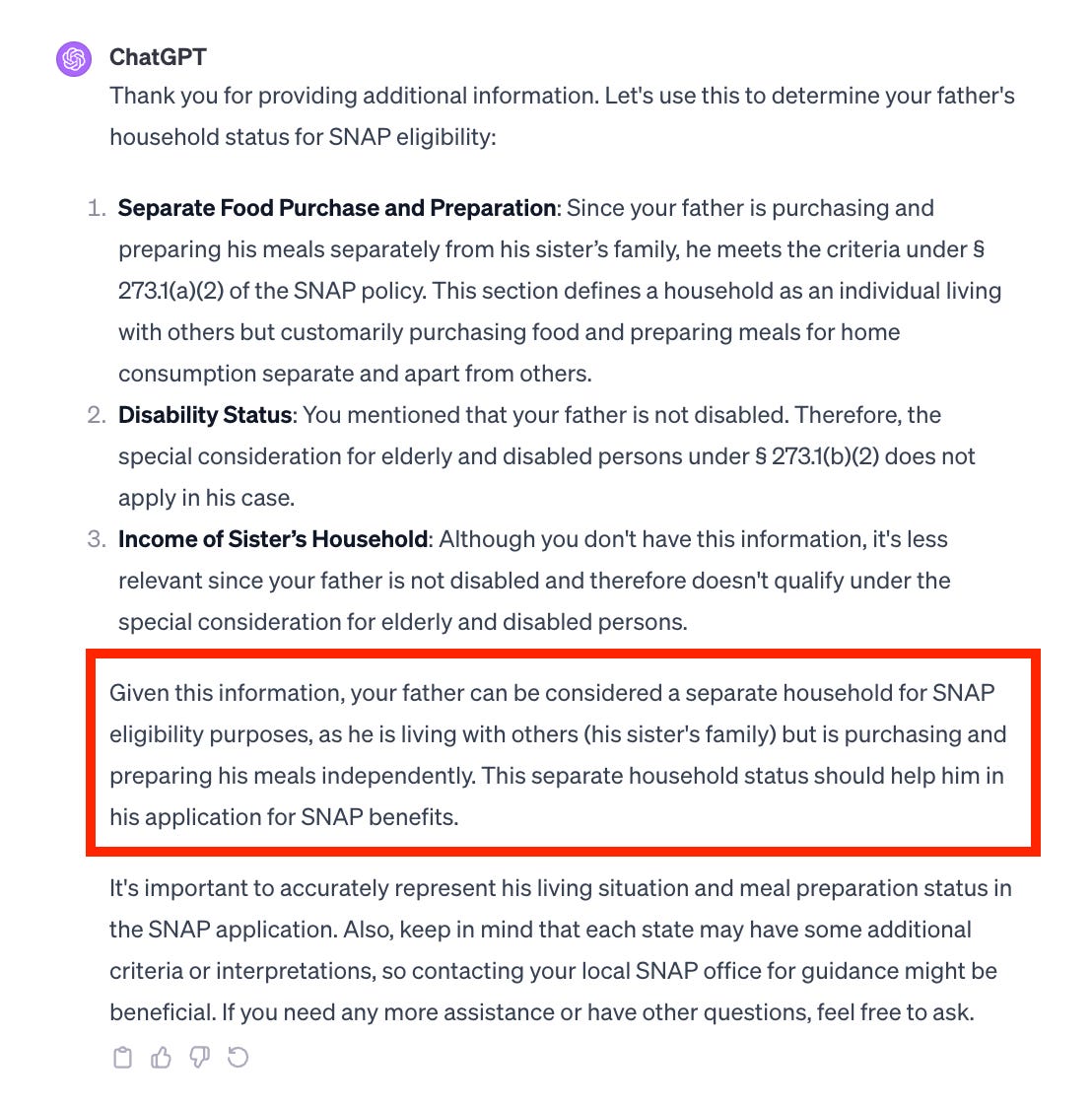

And we get an answer. And, as a SNAP guy, I’ll say this answer is pretty good! (I’ve boxed the key part in red.)
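The exchange above is a multi-turn conversation: the model’s clarifying questions and the added facts both stay in context for the final answer. A sketch of that bookkeeping, with a hypothetical `add_followup_turn` helper and example text that is illustrative only (not the actual exchange in the screenshots).

```python
# Hypothetical helper: the role/content dict shape mirrors common chat APIs.
Message = dict[str, str]


def add_followup_turn(history: list[Message], model_reply: str, extra_facts: str) -> list[Message]:
    """Return a new history with the model's clarifying questions and the user's answers appended.

    The input list is not modified, so earlier turns can be replayed or audited.
    """
    return history + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": extra_facts},
    ]


# Illustrative text only, not the actual exchange from the screenshots.
history: list[Message] = [
    {"role": "system", "content": "Think step by step. Ask follow-up questions if necessary."},
    {"role": "user", "content": "Client question and household concept regs go here."},
]
history = add_followup_turn(
    history,
    model_reply="Do you usually purchase and prepare meals together?",
    extra_facts="We buy groceries separately and cook separately.",
)
```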

I think this more general approach (pairing a policy question in an end user’s own language with the specific policy language a government staff member would use to answer it) holds real promise, and I am working on experimenting with and evaluating it more.

This would definitely need to be hardened before being used in something closer to a production (real users) environment. My first step would most likely be to keep a human in the loop, with something like an email support desk or a content website as the service channel. (That mitigates much of the risk of the answers being instantaneous.)
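A human-in-the-loop setup like this amounts to a queue where AI output is only ever a draft. A minimal sketch, assuming a simple in-memory queue; the `ReviewQueue`/`Draft` names and the statuses are hypothetical, and a real support desk would also need persistence and audit logging.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    question: str
    ai_answer: str
    status: Status = Status.PENDING_REVIEW
    final_answer: Optional[str] = None  # set only when a staff member approves


@dataclass
class ReviewQueue:
    drafts: list[Draft] = field(default_factory=list)

    def submit(self, question: str, ai_answer: str) -> Draft:
        """Record an AI-generated draft; nothing is sent to the user yet."""
        draft = Draft(question, ai_answer)
        self.drafts.append(draft)
        return draft

    def review(self, draft: Draft, approve: bool, edited_answer: Optional[str] = None) -> None:
        """A staff member approves (optionally with edits) or rejects a draft."""
        if approve:
            draft.status = Status.APPROVED
            draft.final_answer = edited_answer if edited_answer is not None else draft.ai_answer
        else:
            draft.status = Status.REJECTED

    def sendable(self) -> list[Draft]:
        """Only human-approved drafts are eligible to go out over the service channel."""
        return [d for d in self.drafts if d.status is Status.APPROVED]
```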

Some of the ways I’d like to extend this:

Testing multiple levels of policy (e.g., state + federal). In this case we are using only federal regs, but every state has its own policies that fill in some of the gaps.[3]

Testing using a model to categorize a question by its relevant policy section, so we can identify which policy language to pull up and include.

Harder cases. In particular, I’d love to stick with household composition, as it’s bounded but also fairly complicated for lots of people’s actual situations!
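The first two extensions can be sketched together: route a question to a policy section, then pull federal text for that section followed by any state policy that fills its gaps. This is a hypothetical sketch: plain keyword matching is swapped in as a stand-in for model-based categorization, and the section names, keywords, and policy snippets are illustrative only.

```python
# Hypothetical sketch: keyword matching stands in for model-based categorization,
# and the section names, keywords, and policy snippets are all illustrative.
SECTION_KEYWORDS: dict[str, list[str]] = {
    "household_composition": ["live with", "roommate", "boyfriend", "girlfriend", "household"],
    "income": ["income", "paycheck", "wages"],
}


def categorize(question: str) -> list[str]:
    """Return each section whose keywords appear in the question (case-insensitive)."""
    q = question.lower()
    return [name for name, words in SECTION_KEYWORDS.items() if any(w in q for w in words)]


def build_policy_context(sections: list[str], federal: dict[str, str], state: dict[str, str]) -> str:
    """Federal text first, then any state policy that fills gaps for the same section."""
    chunks: list[str] = []
    for name in sections:
        if name in federal:
            chunks.append("[Federal] " + federal[name])
        if name in state:
            chunks.append("[State] " + state[name])
    return "\n\n".join(chunks)
```

Putting federal text first and state text second loosely mirrors how the federal regs leave unregulated situations to state policy; a real version would need to handle precedence and conflicts more carefully.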

The AI EO: shouldn’t we be looking at our (human) baseline of accuracy to guide our use of AI?

The AI EO was also accompanied by the release of draft OMB guidance, which is accepting comments through December 5.

This week I tweeted a case I think is worth considering:

In general, I see a few points here:

If AI really is promising for certain workloads or sub-tasks involved in delivering services, it seems to me we should rationally be weighing the cost of not using it. For example, today 44% of SNAP denials are erroneous, either in substance or in how they were handled. About 1 in 6 denials are fully inaccurate! That is a useful baseline against which to assess the use of AI.

When we have goals of both timeliness and accuracy, we naturally have to assess tradeoffs. So I have to ask: in cases where agency staff are unable to process something like a benefits renewal on time (say it’s been 30 days and still no action has been taken), might we be willing to use AI to do it, given that it reduces real harm? It’s effectively a backstop to human work rather than a replacement for it.

Appeals: the EO itself and outside observers are rightly discussing how appeals to humans are necessary for the use of AI in government. But here’s a question: what would we need to do to bolster capacity for an increased volume of appeals? It strikes me that the policy and funding action needed here is an important implementation corollary, beyond the more magic-words notion that “there should be an appeal path.” (I will confess I’m not sure the fair hearing capacity in programs like Medicaid/SNAP/TANF could handle a 3x increase in appeal requests!)
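The three points above can be made a bit more concrete with back-of-envelope code. A minimal sketch: the 44% and 1-in-6 baselines and the 30-day example come from the post, while the function names and every other number (candidate error counts, appeal volumes, hearings per officer) are hypothetical placeholders, not real program data.

```python
import math
from datetime import date, timedelta

# Point 1: compare a candidate process against the human error-rate baseline.
# Baseline figures are the ones cited in the post; candidate numbers are placeholders.
HUMAN_DENIAL_ERROR_RATE = 0.44       # denials erroneous in substance or procedure
HUMAN_FULLY_INACCURATE_RATE = 1 / 6  # roughly 1 in 6 denials


def beats_baseline(candidate_errors: int, sample_size: int, baseline: float) -> bool:
    """True if the candidate's observed error rate is below the baseline rate."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return candidate_errors / sample_size < baseline


# Point 2: AI as a backstop only when human processing has stalled.
PROCESSING_DEADLINE = timedelta(days=30)  # the post's illustrative 30-day example


def needs_backstop(submitted: date, today: date, action_taken: bool) -> bool:
    """Flag a case only if staff have taken no action by the deadline."""
    return not action_taken and (today - submitted) >= PROCESSING_DEADLINE


# Point 3: back-of-envelope fair hearing capacity if appeal volume scales up.
def hearing_officers_needed(monthly_appeals: float, multiplier: float,
                            hearings_per_officer_per_month: float) -> int:
    """Officers needed to keep pace with monthly_appeals * multiplier (rounded up)."""
    if hearings_per_officer_per_month <= 0:
        raise ValueError("throughput must be positive")
    return math.ceil(monthly_appeals * multiplier / hearings_per_officer_per_month)
```

With purely hypothetical inputs of 1,000 appeals a month and 60 hearings per officer per month, `hearing_officers_needed(1000, 3, 60)` returns 50, versus 17 at today’s volume. Likewise, a single point estimate from `beats_baseline` on a small sample says little; a real evaluation would want a comparable case mix and some measure of uncertainty.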

A screenshot with (a little!) context

Weird thing: it seems Palantir is submitting public comments on proposed rules in the Federal Register that are, effectively, business development pitches? Below is one from a recent USDA SNAP proposed rulemaking. (I can’t help but wonder if they used AI to help generate it!)

[1] I am one of those pedants who finds the distinction between complicated and complex important. Rules in a program like SNAP are complicated insofar as they have lots of branches, exceptions to exceptions, etc. But they are also complex insofar as they represent an underlying adaptive system of many different actors. To actually find a source of truth, you may have to assess information from many different organizations, from federal law to regs to a state policy handbook or training manual. You may even find that while an answer is codified in these documents, you receive a different answer when you call and speak to a staff member. This is not pernicious; rather, it reflects the fact that the systems we are talking about are much more fragmented than centralized.

[2] This is more or less me filling in the gaps based on the comments/replies in the Reddit thread. That seems fair, since in a real production environment the user could answer these questions directly.

[3] In fact, the federal regs themselves explicitly delegate this responsibility to states:

“(c) Unregulated situations. For situations that are not clearly addressed by the provisions of paragraphs (a) and (b) of this section, the State agency may apply its own policy for determining when an individual is a separate household or a member of another household if the policy is applied fairly, equitably and consistently throughout the State.”